I still remember the first time I came across an anti-flicker snippet…

A client had asked me to look at the speed of their sites for countries in South East Asia and South America.

The sites weren’t as fast as I thought they should be, but they weren’t horrendously slow either. What troubled me was how long they took to start displaying content.

I was puzzled…

When I examined the waterfall in WebPageTest, I could see the hero image being downloaded at around 1 second, yet nothing appeared on the screen for 3.5 seconds!

I switched to DevTools and sure enough saw the same behaviour.

Profiling the page showed that even though multiple images were being downloaded quickly, the browser wasn’t even attempting to render them for several seconds.

Hunting through the source, I found this snippet (I’ve unminified it to make it easier to read):

```html
<script>
// pre-hiding snippet for Adobe Target with asynchronous Launch deployment
(function(g, b, d, f) {
  // Inject a <style id="at-body-style"> element containing the hiding CSS into the <head>
  (function(a, c, d) {
    if (a) {
      var e = b.createElement("style");
      e.id = c;
      e.innerHTML = d;
      a.appendChild(e);
    }
  })(b.getElementsByTagName("head")[0], "at-body-style", d);

  // Fallback: remove the style element after f milliseconds (3E3 = 3000ms)
  setTimeout(function() {
    var a = b.getElementsByTagName("head")[0];
    if (a) {
      var c = b.getElementById("at-body-style");
      c && a.removeChild(c);
    }
  }, f);
})(window, document, "body {opacity: 0 !important}", 3E3);
</script>
```

The snippet does two things:

- Injects a style element that hides the body of the document by setting its opacity to 0
- Adds a function that gets called after 3 seconds to remove the style element. This is a fallback in case the Adobe Target script fails or takes longer than 3 seconds to reach the point where it would remove the style element.

Google Optimize, Visual Web Optimizer (VWO) and probably others adopt a similar approach.

Urgh…

Impact of Anti-Flicker Snippets

Proponents argue that we need anti-flicker snippets because visitors seeing the page change as experiments execute is a poor experience, and because visitors who know they are part of an experiment may behave differently and so influence the results (a.k.a. the Hawthorne Effect).

I’ve not found any studies that validate this argument, but it may have merit: one of the Core Web Vitals, Cumulative Layout Shift, aims to measure how much elements move around during a page’s lifetime, and the more they move, the worse the visitor’s experience is presumed to be.

As a counterpoint, let’s examine the experience that anti-flicker snippets deliver.

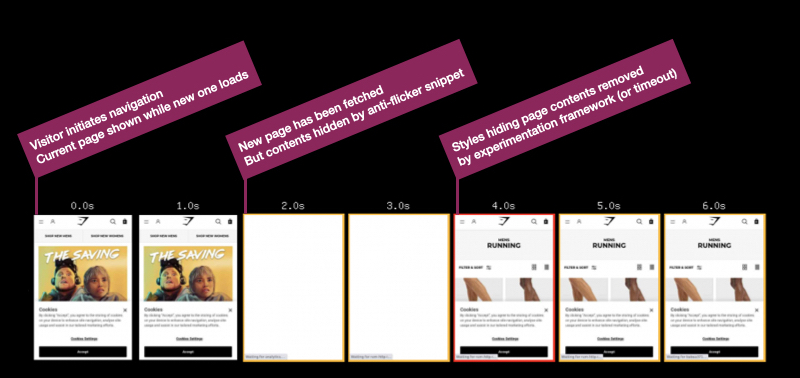

Here’s a filmstrip from WebPageTest that simulates a visitor navigating from Gymshark’s home page to a category page.

Gymshark – navigating from home to category page – London, Chrome, Cable

There are some blank frames in the middle of the filmstrip where the anti-flicker snippet hides the page for a few seconds (it’s actually 1.7s if you use the link above to view the test result).

By default, Google Optimize uses a timeout of 4 seconds, so in this case we can determine that the experimentation script completed before the timeout.

Compare this to a test where the anti-flicker snippet has been removed (using a Cloudflare Worker): the page renders progressively, so, at least in this case, hiding the page doesn’t improve the visitor’s experience.

The blank frames may also signal to visitors that they are part of an experiment; whether or not they realise it, they may notice that the experience is different compared to other sites.

When the experimentation script doesn’t finish executing before the timeout expires, the visitor gets the ‘worst of both worlds’: they see a blank screen for a long time and then potentially see the effect of the experiment as it executes.

This might be because the visitor has a slower device or network connection, because the experimentation script is large and so takes too long to fetch and execute, or because other scripts in the page are delaying the experimentation script.
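To make the trade-off concrete, here is a deliberately simplified model of what the visitor experiences. It is a sketch rather than vendor code: antiFlickerOutcome is a hypothetical name, both times are assumed to be measured from the moment the snippet hides the page, and it assumes the experiment changes something visible.

```javascript
// Hypothetical model of the anti-flicker trade-off.
// scriptDoneMs: when the testing script finishes and would reveal the page
// timeoutMs:    the snippet's fallback timeout
// Both are assumed to be measured from the moment the page is hidden.
function antiFlickerOutcome(scriptDoneMs, timeoutMs) {
  const timedOut = scriptDoneMs > timeoutMs;
  return {
    // The page stays hidden until whichever happens first
    hiddenMs: Math.min(scriptDoneMs, timeoutMs),
    // If the timeout fired first, the experiment changes the page in view
    changesAfterReveal: timedOut,
  };
}
```

With Google Optimize’s default 4 second timeout, a script that finishes at 1.7s hides the page for 1.7s with no visible changes, whereas one that takes 5.5s hides the page for the full 4s and then changes it in front of the visitor.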

What we don’t know is how long visitors are staring at a blank screen!

Measuring the Anti-Flicker Snippet

Ideally, the experimentation frameworks would communicate when key events occur, perhaps by firing events, posting messages, or creating User Timing marks and measures.

But, in common with many other 3rd-party tags, they seem reluctant to do this, so we have to create our own methods for measuring them.

All three examples below use the MutationObserver API to track when either the class that hides the document or the style element containing the hiding styles is removed (different products adopt slightly different approaches to hiding the page).

Each example sets a User Timing mark, named anti-flicker-end, when the anti-flicker styles are removed.

How long the page is hidden could be measured by adding a start mark to the snippet that hides the page and then using performance.measure to calculate the elapsed duration.
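A minimal sketch of that approach follows. It assumes one of the measurement scripts below is setting the anti-flicker-end mark. The anti-flicker-start and anti-flicker-duration names, and the measureAntiFlicker function, are hypothetical.

```javascript
// 1. Add this line to the anti-flicker snippet, immediately before it hides the page.
//    'anti-flicker-start' is a hypothetical mark name.
performance.mark('anti-flicker-start');

// 2. Call this once the 'anti-flicker-end' mark has been set.
//    Returns how long the page was hidden in ms, or null if either mark is missing.
function measureAntiFlicker() {
  var start = performance.getEntriesByName('anti-flicker-start', 'mark');
  var end = performance.getEntriesByName('anti-flicker-end', 'mark');
  if (!start.length || !end.length) {
    return null;
  }
  performance.measure('anti-flicker-duration', 'anti-flicker-start', 'anti-flicker-end');
  // Read the measure back rather than relying on measure()'s return value,
  // which older browsers don't provide.
  var measures = performance.getEntriesByName('anti-flicker-duration', 'measure');
  return measures[measures.length - 1].duration;
}
```

In the measurement scripts, measureAntiFlicker() could be called straight after performance.mark('anti-flicker-end').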

Some RUM products can be configured to collect the marks and measures automatically; others, like analytics products, rely on you explicitly calling their API.
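For products that need an explicit call, something like the sketch below could gather the values and send them on. The reportAntiFlicker function, the endpoint URL and the payload shape are all placeholders. It looks for the anti-flicker-end mark and, if one exists, a measure named anti-flicker-duration (a hypothetical name), reporting null for anything missing.

```javascript
// Hypothetical sketch: collect the anti-flicker timings and send them to a
// RUM or analytics endpoint. The endpoint and payload shape are placeholders.
function reportAntiFlicker(endpoint) {
  var endMarks = performance.getEntriesByName('anti-flicker-end', 'mark');
  var measures = performance.getEntriesByName('anti-flicker-duration', 'measure');
  var payload = {
    // When the hiding styles were removed, relative to navigation start
    endMs: endMarks.length ? Math.round(endMarks[0].startTime) : null,
    // How long the page was hidden, if a start mark and measure were added
    hiddenMs: measures.length ? Math.round(measures[0].duration) : null
  };
  // sendBeacon queues the request so it survives the visitor navigating away
  if (typeof navigator !== 'undefined' && typeof navigator.sendBeacon === 'function') {
    navigator.sendBeacon(endpoint, JSON.stringify(payload));
  }
  return payload;
}
```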

In my testing so far, I’ve found the measurement scripts closely match the blank periods in WebPageTest filmstrips, but they often record the end a couple of hundred milliseconds before the blank period actually ends. This isn’t surprising: once the styles have been updated, the browser still has to lay out and render the page. In future, perhaps Element Timing might provide a more accurate measurement.
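One way to get a little closer is to set a second mark a couple of animation frames after the styles are removed. This is an untested sketch. The double requestAnimationFrame pattern is a commonly used heuristic for "the next frame has been painted", not a guarantee. The markAfterNextPaint function and the anti-flicker-painted mark name are hypothetical.

```javascript
// Hypothetical sketch: set a mark roughly when the revealed page has been painted.
// raf can be injected (e.g. for testing); it defaults to the browser's requestAnimationFrame.
function markAfterNextPaint(name, raf) {
  raf = raf || window.requestAnimationFrame.bind(window);
  raf(function () {
    // The first callback runs before the next frame is painted...
    raf(function () {
      // ...the second runs before the following frame, by which point the
      // first frame has usually been painted.
      performance.mark(name);
    });
  });
}
```

It would be called straight after performance.mark('anti-flicker-end'), for example as markAfterNextPaint('anti-flicker-painted').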

Although I’m working towards deploying these with a client, we’ve not deployed them yet, so treat them as prototypes and test them in your own environment!

Also, I spend more time reading other people’s code than writing my own, so feel free to suggest ways to improve the measurement snippets; for example, checks for MutationObserver support could be added.
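As a sketch of such a check, the hypothetical helper below creates an observer only when the browser supports MutationObserver and the target element exists. Otherwise it returns null, and the page carries on unaffected.

```javascript
// Hypothetical helper: only start observing when MutationObserver is available
// and the target element exists. Returns the observer, or null otherwise.
function observeIfSupported(target, options, callback) {
  if (typeof MutationObserver === 'undefined' || !target) {
    return null;
  }
  var observer = new MutationObserver(callback);
  observer.observe(target, options);
  return observer;
}
```

For example, the Adobe Target script could call observeIfSupported(document.getElementsByTagName('head')[0], { childList: true }, callback) instead of creating the observer directly.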

I’ve created a GitHub repository to track the scripts (I plan on adding more for other 3rd-parties) and pull requests are very welcome!

- Google Optimize

Google Optimize adds an async-hide class to the html element so the script detects when this class is removed.

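```javascript
// Use traditional 'for loops' (and var, indexOf) for IE 11
var callback = function(mutationsList, observer) {
  for (var i = 0; i < mutationsList.length; i++) {
    var mutation = mutationsList[i];
    // oldValue is null if the element had no class attribute before the change
    var oldValue = mutation.oldValue || '';

    if (mutation.attributeName === 'class' &&
        oldValue.indexOf('async-hide') !== -1 &&
        !mutation.target.classList.contains('async-hide')) {
      performance.mark('anti-flicker-end');
      observer.disconnect(); // MutationObserver has no remove() method
      return;
    }
  }
};

var observer = new MutationObserver(callback);

var node = document.getElementsByTagName('html')[0];

observer.observe(node, { attributes: true, attributeOldValue: true, attributeFilter: ['class'] });
```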

- Adobe Target

Adobe Target adds a style element with an id of at-body-style, and the script below detects when this element is removed.

```javascript
// Use traditional 'for loops' (and var) for IE 11
var callback = function(mutationsList, observer) {
  for (var i = 0; i < mutationsList.length; i++) {
    var removedNodes = mutationsList[i].removedNodes;

    for (var j = 0; j < removedNodes.length; j++) {
      var node = removedNodes[j];

      if (node.nodeName === 'STYLE' && node.id === 'at-body-style') {
        performance.mark('anti-flicker-end');
        observer.disconnect();
        return; // exit both loops, not just the inner one
      }
    }
  }
};

var observer = new MutationObserver(callback);

var head = document.getElementsByTagName('head')[0];

observer.observe(head, { childList: true });
```

- Visual Web Optimizer

VWO adds a style element with an id of _vis_opt_path_hides and as with Adobe Target the script detects when this element is removed.

During testing, I also observed VWO adding temporary styles to hide other page elements.

```javascript
// Use traditional 'for loops' (and var) for IE 11
var callback = function(mutationsList, observer) {
  for (var i = 0; i < mutationsList.length; i++) {
    var removedNodes = mutationsList[i].removedNodes;

    for (var j = 0; j < removedNodes.length; j++) {
      var node = removedNodes[j];

      if (node.nodeName === 'STYLE' && node.id === '_vis_opt_path_hides') {
        performance.mark('anti-flicker-end');
        observer.disconnect();
        return; // exit both loops, not just the inner one
      }
    }
  }
};

var observer = new MutationObserver(callback);

var head = document.getElementsByTagName('head')[0];

observer.observe(head, { childList: true });
```
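The Adobe Target and VWO scripts differ only in the id of the style element they watch for. One possible refactoring moves the matching into a pure function that can be tested without a browser and reuses it for each vendor. This is a sketch, and the function names are hypothetical. Like the scripts above, it only works if it runs before the vendor removes its style element.

```javascript
// Hypothetical shared matcher: true if any mutation removed a <style> with this id.
function removedStyleWithId(mutationsList, id) {
  for (var i = 0; i < mutationsList.length; i++) {
    var removed = mutationsList[i].removedNodes;
    for (var j = 0; j < removed.length; j++) {
      if (removed[j].nodeName === 'STYLE' && removed[j].id === id) {
        return true;
      }
    }
  }
  return false;
}

// Hypothetical browser wiring: watch <head> and set a mark when the style goes.
function watchStyleRemoval(id, markName) {
  var head = document.getElementsByTagName('head')[0];
  var observer = new MutationObserver(function (mutationsList) {
    if (removedStyleWithId(mutationsList, id)) {
      performance.mark(markName);
      observer.disconnect();
    }
  });
  observer.observe(head, { childList: true });
  return observer;
}
```

Usage would be watchStyleRemoval('at-body-style', 'anti-flicker-end') for Adobe Target, or watchStyleRemoval('_vis_opt_path_hides', 'anti-flicker-end') for VWO. It doesn’t cover the other temporary styles VWO adds.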

Anti-Flicker Snippets are a Symptom of a Larger Issue

Once we’ve started collecting data on how long the page is hidden for, we can experiment with reducing the timeout, or even removing the anti-flicker snippet completely.

After all, these are experimentation tools so we should experiment with how their implementation affects visitors’ experience and behaviour!
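Once measurements are flowing in, a couple of small helpers like these can show how a shorter timeout would play out. Both functions are hypothetical and intended for offline analysis of collected data. One caveat applies: the recorded durations are capped at the current timeout, because the snippet reveals the page when it fires. Values at the cap therefore mean the script didn’t finish in time; they don’t tell you how long it would have taken.

```javascript
// Nearest-rank percentile of hidden durations (ms); p is 0-100.
function percentile(values, p) {
  if (values.length === 0) return NaN;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Share of page views that would still be hidden when a candidate timeout fires,
// i.e. where the page would be revealed before the testing script finished.
function shareOverTimeout(values, timeoutMs) {
  if (values.length === 0) return 0;
  return values.filter((v) => v > timeoutMs).length / values.length;
}
```

For example, with hidden durations of 200, 400, 600, 800, 1000, 1200, 1400, 1600, 1800 and 4000ms, the 90th percentile is 1800ms, and a 1500ms timeout would reveal the page before the testing script finished for 30% of those page views.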

But, fundamentally, the anti-flicker snippet is a symptom of a larger issue, and that issue is that testing tools finish their execution too late.

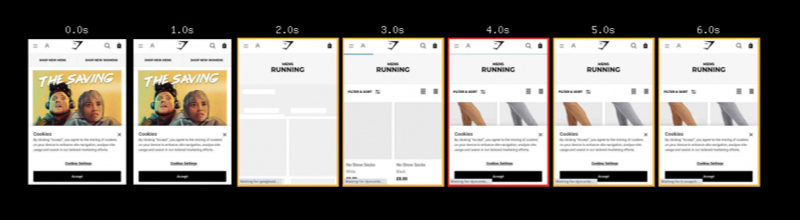

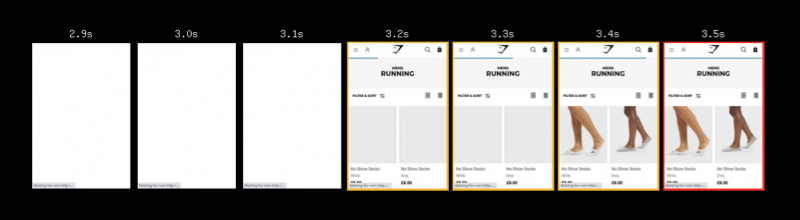

Revisiting the first Gymshark test and zooming into the filmstrip at a 100ms frame interval, we can see the page was revealed at 3.2s.

Gymshark – navigating from home to category page – London, Chrome, Cable

Or to view it another way… Google Optimize finishes execution at around 3.2s… and as Largest Contentful Paint needs to happen within 2.5s to be considered ‘good’, this suggests the delays caused by experimentation tools may be testing our visitors’ patience.

(In Gymshark’s case there are some other factors that further contribute to the delay too)

Shrinking the size of the testing script will help to reduce how long it takes to fetch and execute, and I mentioned some factors that can help with this in Reducing the Site-Speed Impact of Third-Party Tags.

The size of tags for testing services often depends on the number of experiments included, number of visitor cohorts, page URLs, sites etc. and reducing these can reduce both the download size and the time it takes to execute the script in the browser.

Out-of-date experiments, A/A tests that are being used as workarounds for CMS issues or development backlogs, and experiments for different sites (staging and live etc.) in the same tag are some of the aspects I look for first.

Recently I came across an example where base64-encoded images were being included in the testing scripts, making them huge, so avoid that too!
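Checking for this can be scripted. The hypothetical helper below scans a testing tag’s source text for base64-encoded image data: URIs and reports how many it finds and how many characters they occupy. The regular expression is a rough heuristic, not a full data: URI parser.

```javascript
// Hypothetical audit helper: find base64-encoded images inlined in a tag's source.
function findInlineBase64Images(scriptText) {
  // Matches e.g. data:image/png;base64,... or data:image/svg+xml;base64,...
  const pattern = /data:image\/[a-z0-9.+-]+;base64,[A-Za-z0-9+\/=]+/gi;
  const matches = scriptText.match(pattern) || [];
  const totalChars = matches.reduce((sum, m) => sum + m.length, 0);
  return { count: matches.length, totalChars };
}
```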

But there’s also another challenge…

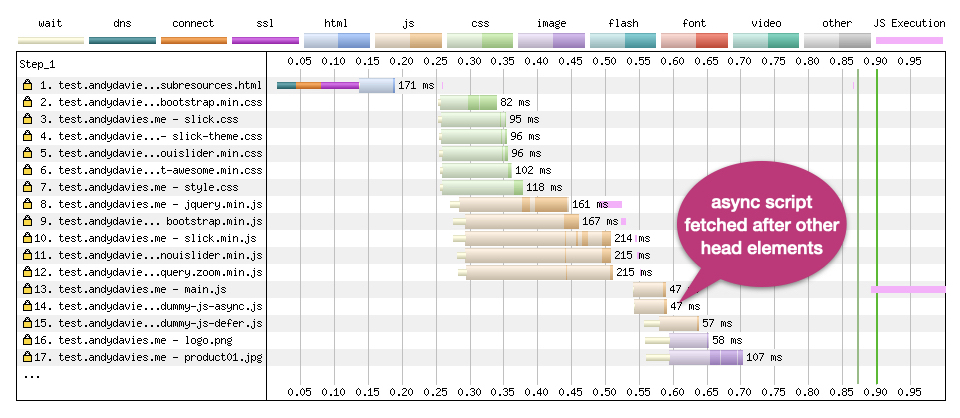

Vendors recommend anti-flicker snippets because their testing scripts are loaded asynchronously – so the browser can continue building the page while the script is being fetched – and browsers, particularly Chrome, deprioritise async scripts.

In Chrome, this deprioritisation means the fetch (even from cache) of asynchronous scripts in the head is delayed until the second phase of the page load.

An example of this can be seen in the waterfall below, where request 14 has been deprioritised because it’s async.

Prioritisation Test Page – Dulles, Chrome, Cable

So not only are the scripts finishing too late, they’re also starting too late!

To compound the delay, some testing scripts are loaded via a Tag Manager, and, as Simo Ahava demonstrated with Google Optimize, this increases the delay even further.

The alternative, at least for client-side testing, is to adopt the blocking-script approach that products like Optimizely and Maxymiser use.

But then we face the issue that the browser must wait for the testing script to be fetched and executed before it can continue building the page, and if the script host is inaccessible that can be a long wait.

We’re stuck between ‘a rock and a hard place’!

There is a third option… and that’s to create the page variants server-side before the HTML is even received by the browser.

Unfortunately too few publishing and ecommerce platforms have built-in support for experimentation so implementing this isn’t as easy as the current client-side options.

Some Content Delivery Networks (CDNs) already have the capability to serve test variants from their nodes, and as Edge Computing offerings from Akamai, Cloudflare, Fastly et al. mature, I expect to see A/B and multivariate (MV) testing vendors offer ‘experimentation at the edge’ as a capability.

Several of the testing vendors currently support server-side testing but they still depend on sites to do the heavy lifting of implementing variants themselves.
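To illustrate what creating variants before the HTML reaches the browser involves, here is a minimal, hypothetical sketch of deterministic variant assignment that could run on a server or an edge worker. Hashing a visitor id together with an experiment id means a returning visitor keeps getting the same variant without any client-side script. This sketch uses 32-bit FNV-1a, which is an arbitrary choice. Real vendors use their own assignment schemes, and how visitor ids are generated and stored is left out.

```javascript
// 32-bit FNV-1a hash of a string (UTF-16 code units; fine for ASCII ids).
function fnv1a32(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 bits
  }
  return hash >>> 0;
}

// Hypothetical assignment: the same visitor always gets the same variant of an experiment.
function assignVariant(visitorId, experimentId, variants) {
  if (variants.length === 0) {
    throw new Error('at least one variant is required');
  }
  const bucket = fnv1a32(experimentId + ':' + visitorId) % variants.length;
  return variants[bucket];
}
```

The server or edge worker would then render the chosen variant directly into the HTML, so there would be nothing to hide while a script runs.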

Closing Thoughts

The default timeout values on anti-flicker snippets are set way too high (3 seconds or more), especially when we consider the limits that are placed on metrics like Largest Contentful Paint.

If you’re using anti-flicker snippets as part of your experimentation toolset, you should measure how long visitors are being shown a blank screen, with the aim of reducing the timeout values or even removing the anti-flicker snippet completely.

In his post on measuring the impact of Google Optimize’s anti-flicker snippet, Simo highlights some of the events that Google Optimize exposes during its lifecycle, and these can be used as hooks for getting a more complete picture of when tests are running and their impact on your visitors’ experience.

If your vendor doesn’t expose timings or events for key milestones, point them at Google Optimize as a competitor and ask them to. It really is unacceptable that 3rd-party tags don’t already expose this data.

As ever with client-side scripts, reducing their size will reduce their impact on site speed, so monitor their size and clean them up regularly.

Fundamentally, we need to move this work out of the browser, so track what your vendors are doing to support server-side experiments, particularly when it comes to integration with CDNs’ Edge Compute platforms, as this is going to be the most practical way for many sites to implement it.

Further Reading

Exploring Site Speed Optimisations With WebPageTest and Cloudflare Workers, Andy Davies, Sep 2020

Google Optimize Anti-flicker Snippet Delay Test, Simo Ahava, May 2020

JavaScript Loading Priorities in Chrome, Addy Osmani, Feb 2019

Reducing the Site-Speed Impact of Third-Party Tags, Andy Davies, Oct 2020

Simple Way To Measure A/B Test Flicker Impact, Simo Ahava, May 2020

Timing Snippets for 3rd-Party Tags