"Note that WebKit already partitions caches and HTML5 storage for all third-party domains." - Intelligent Tracking Prevention

Seems a pretty innocuous note but…

What this means is that Safari caches content from third-party origins separately for each document origin. For example, if two sites, say a.com and b.com, both use a common library, third-party.com/script.js, then script.js will be cached separately for each site.

And if someone has an 'empty' cache and visits the first site and then the other, script.js will be downloaded twice.
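As a rough illustration of the difference, here is a toy model of a shared cache versus a partitioned one. It is only a sketch: `ToyCache` and its key format are made up for this example and say nothing about how WebKit actually stores or keys its cache.

```javascript
// Toy model only: a cache that is either shared across all sites or
// partitioned by the host of the page that requested the resource.
class ToyCache {
  constructor(partitioned) {
    this.partitioned = partitioned;
    this.entries = new Set();
    this.downloads = 0;
  }

  // Returns 'cache' on a hit, or 'network' after recording a download.
  fetch(pageHost, resourceUrl) {
    const key = this.partitioned ? `${pageHost}|${resourceUrl}` : resourceUrl;
    if (this.entries.has(key)) return 'cache';
    this.entries.add(key);
    this.downloads += 1;
    return 'network';
  }
}

const shared = new ToyCache(false);
shared.fetch('a.com', 'third-party.com/script.js');
shared.fetch('b.com', 'third-party.com/script.js');

const partitioned = new ToyCache(true);
partitioned.fetch('a.com', 'third-party.com/script.js');
partitioned.fetch('b.com', 'third-party.com/script.js');

console.log(shared.downloads, partitioned.downloads); // 1 2
```

With the shared cache the second site gets a hit; with the partitioned cache each site triggers its own download.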

Malte Ubl was the first person I saw mention this, back in April 2017, but it appears this has been Safari's behaviour since 2013.

So how much should we worry about Safari's behaviour from a performance perspective?

Checking Safari's Behaviour

Apart from the note in the WebKit post there's little documented detail on Safari's behaviour.

So I decided to check it for myself…

Using the HTTP Archive I found two sites that included the same resource from one of the public JavaScript Content Delivery Networks (CDNs).

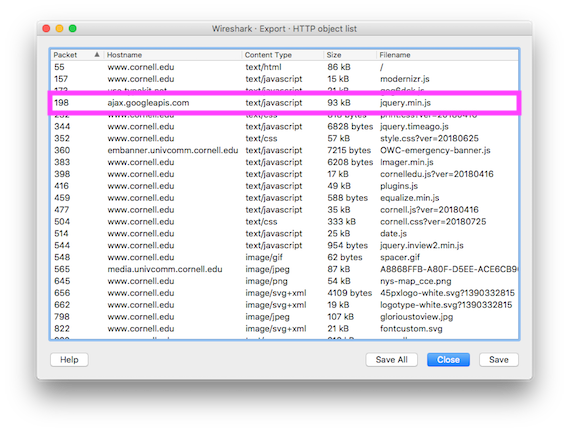

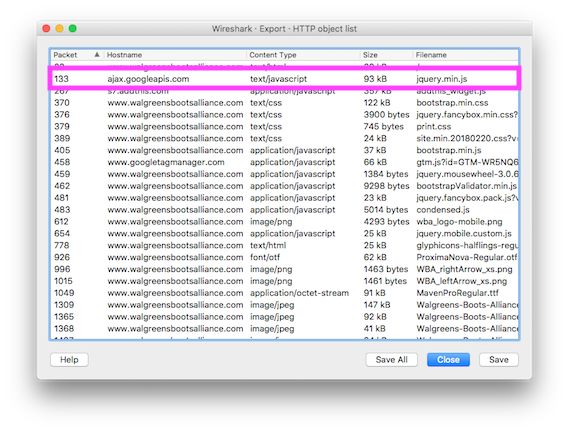

In this case I chose Cornell University and Walgreens Boots Alliance as they both include jQuery 1.10.2 over HTTP from ajax.googleapis.com.

Then I visited the sites one after the other using Safari on iOS 11.4.1 and captured the network traffic with tcpdump.

Sure enough when the packet capture is viewed in Wireshark it's clear that even though the pages were loaded immediately after each other, jQuery is requested over the network for each page load.

www.cornell.edu loading jQuery from ajax.googleapis.com

www.walgreensbootsalliance.com loading jQuery from ajax.googleapis.com

Repeating the tests with two sites that used HTTPS, https://www.feelcycle.com/ and https://www.mazda.no/, produced similar results.

Cache partitioning is aimed at defending against third parties tracking visitors across multiple sites, e.g. via cookies. Another mechanism that can be used to track visitors is TLS session resumption; see Tracking Users across the Web via TLS Session Resumption for more detail.

And it appears that when loading the second site (Mazda) the TLS connection to ajax.googleapis.com was resumed using information from the first, so perhaps there are limits to Intelligent Tracking Prevention's current capabilities and further enhancements to come.

(I used Wireshark as I wanted to see the raw network traffic but you can repeat my tests in Safari DevTools)

What's the Performance Impact?

In theory using common libraries, fonts etc. from a public CDN provides several benefits:

reduced hosting costs for sites on a tight budget – Troy Hunt highlights how it reduces the cost of running ';--have i been pwned? in 10 things I learned about rapidly scaling websites with Azure.

improved performance as the resource is hosted on a CDN (closer to the visitor), and in theory there's the possibility the resource may already be cached from an earlier visit to another site that used the same resource.

For a while I've been skeptical about shared caching (in the browser) and particularly whether it occurs often enough to deliver benefits.

Shared Caching

In 2011, Steve Webster questioned whether enough sites shared the same libraries for the caching benefits to exist and current HTTP Archive data shows sites are still using diverse versions of common libraries.

The March 2018 HTTP Archive (desktop) run has data for approximately 466,000 pages and the most popular public library, jQuery 1.11.3 from ajax.googleapis.com (served over HTTPS), is used by just over 1% of them.

I'm not sure what level adoption needs to reach for shared caching to achieve critical mass but 1% certainly seems unlikely to be high enough and even Google's most popular font – OpenSans – is only requested by around 9% of pages in the HTTP Archive.

Of course if more sites use the same version of a library, from the same public CDN and over the same scheme then the probability of the library being in cache increases.
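To get a feel for the numbers, here is a back-of-the-envelope sketch. It assumes each previously visited site independently uses the exact same library URL with probability equal to its share of pages, that nothing is ever evicted from the cache, and that the cache isn't partitioned. All of these are simplifications, and the figure of 20 sites is purely hypothetical.

```javascript
// Chance that at least one of `sitesVisited` earlier sites used the same
// library URL, under the (optimistic) assumptions described above.
function chanceAlreadyCached(share, sitesVisited) {
  return 1 - Math.pow(1 - share, sitesVisited);
}

// A library used by 1% of pages, after visits to 20 other sites:
console.log(chanceAlreadyCached(0.01, 20).toFixed(3)); // "0.182"
```

Even under these generous assumptions the library is already cached less than one time in five, and real-world eviction only lowers that.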

But even if the third-party resource is used across a critical mass of sites it still has to stay in the cache long enough for it to be there when the next site requests it.

And research by both Yahoo and Facebook demonstrated that resources don't live for as long as we might expect in the browser’s cache.

So if content from some of the most popular sites only lives in the browser cache for a short time what hope do the rest of us have?

Benefits of Content Delivery Networks (CDNs)

The other performance aspect a public CDN brings is the reduction in latency – by moving the resource closer to the visitor there should be less time spent waiting for it to download.

Of course the overhead of creating a connection to a new origin (TCP connection / TLS negotiation etc.) needs to be balanced against the potentially faster download times due to reduced latency.

Most of the clients I deal with already use a CDN so they're already gaining the benefits of the reduced latency.

Self-hosting a library has some other advantages too. It removes the dependency on someone else's infrastructure from both reliability and security perspectives. If your site is using HTTP/2 then the request can be prioritised against the other resources from the origin, and if your site is still using HTTP/1.x then the TCP connection can be reused for other requests (reducing the overall connection overhead, and taking advantage of a growing congestion window).

Overall I'm still skeptical that the shared caching delivers a meaningful benefit for sites already using a CDN and encourage clients to host libraries themselves rather than use a public CDN.

Test it for Yourself

As ever with performance related changes it's worth testing the difference between self-hosting a library vs using it from a public CDN, and there are several approaches for this.

Page Level

Split testing – serving a portion of visitors the library from a public CDN, and others the self-hosted version – is perhaps the simplest method for determining which approach is faster.

Coupled with Real User Monitoring (RUM) to measure page performance, split testing lets us explore how the two approaches affect the key milestones – FirstMeaningfulPaint, DOMContentLoaded, onLoad, or a custom milestone (using User Timing API) as appropriate – across a whole visitor base.
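A minimal sketch of how such a split test might be wired up is below. The self-hosted path, the `chooseVariant` and `loadLibrary` helpers, and the mark name are all hypothetical, and it assumes your RUM tool collects User Timing marks.

```javascript
// Hypothetical URLs: swap in the library and paths your site actually uses.
const SOURCES = {
  cdn: 'https://ajax.googleapis.com/ajax/libs/jquery/1.10.2/jquery.min.js',
  self: '/js/jquery-1.10.2.min.js',
};

// Deterministically map a visitor id to a variant, so the same visitor
// always lands in the same group. cdnShare is the fraction sent to the CDN.
function chooseVariant(visitorId, cdnShare = 0.5) {
  let hash = 0;
  for (const ch of String(visitorId)) {
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0; // keep as unsigned 32-bit
  }
  return hash / 0x100000000 < cdnShare ? 'cdn' : 'self';
}

// Browser only: inject the script for the chosen variant and record a
// User Timing mark, named after the variant, once it has loaded.
function loadLibrary(variant, doc = document) {
  const script = doc.createElement('script');
  script.src = SOURCES[variant];
  script.onload = () => performance.mark(`library-loaded-${variant}`);
  doc.head.appendChild(script);
}
```

Calling `loadLibrary(chooseVariant(visitorId))` early in the page would then let the two groups be compared on the same milestones.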

Resource Level

To explore performance in more depth we can use the Resource Timing API to measure the actual times of the third-party library across all visitors and then beacon the timings to RUM or another service for analysis.
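Something along these lines could collect the timings. It is a sketch rather than a drop-in snippet: `summariseEntry`, `beaconLibraryTimings` and the endpoint you pass in are hypothetical, and it treats a `requestStart` of zero as a sign that the detailed timings are restricted because the third party doesn't send Timing-Allow-Origin.

```javascript
// Reduce a Resource Timing entry to the handful of numbers worth beaconing.
function summariseEntry(entry) {
  const summary = { url: entry.name, duration: entry.duration };
  // Assumption: requestStart is 0 only when the detailed timings are
  // withheld (cross-origin resource without Timing-Allow-Origin).
  if (entry.requestStart > 0) {
    summary.dns = entry.domainLookupEnd - entry.domainLookupStart;
    summary.connect = entry.connectEnd - entry.connectStart;
    summary.ttfb = entry.responseStart - entry.requestStart;
    summary.download = entry.responseEnd - entry.responseStart;
  }
  return summary;
}

// Browser only: find entries whose URL contains urlFragment and send
// their summaries to the given endpoint.
function beaconLibraryTimings(urlFragment, endpoint) {
  const timings = performance
    .getEntriesByType('resource')
    .filter((entry) => entry.name.includes(urlFragment))
    .map(summariseEntry);
  if (timings.length > 0) {
    navigator.sendBeacon(endpoint, JSON.stringify(timings));
  }
  return timings;
}
```

`duration` is available either way, so even restricted entries give a usable, if coarse, comparison.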

Cached or Not?

For browsers that support the Resource Timing (Level 2) API i.e. Chrome & Firefox, it's possible to determine whether a resource was requested over the network or whether a cached copy was used.

Resource Timing (Level 2) includes three attributes describing the size of a resource – transferSize, encodedBodySize and decodedBodySize and Ben Maurer's post to Chromium net-dev illustrates how these attributes can be used to understand whether a resource is cached (or not).

if (transferSize == 0)
    retrieved from cache
else if (transferSize < encodedBodySize)
    cached but revalidated
else
    uncached

By default Resource Timing has restrictions on the attributes that are populated when the resource is retrieved from a third-party origin, and the size attributes will only be available if the third party grants access via the Timing-Allow-Origin header.

So the example above needs updating to account for third-parties that don't allow access to the size information and luckily Nic Jansma has already suggested some approaches for tackling this.
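One possible way to fold that caveat into the check is sketched below. It isn't taken from either post: `classifyCacheState` and its labels are hypothetical, and it assumes a restricted entry reports all three size attributes as zero, so that case is reported as unknown instead of being guessed at.

```javascript
// Classify how a resource was fetched from its Resource Timing entry.
function classifyCacheState(entry) {
  const { transferSize, encodedBodySize, decodedBodySize } = entry;

  // Browsers without Level 2 support don't expose the size attributes.
  if (typeof transferSize !== 'number') return 'unsupported';

  // Assumption: a cross-origin entry without Timing-Allow-Origin reports
  // all three sizes as zero, which can't be told apart from a cached
  // resource with an empty body.
  if (transferSize === 0 && encodedBodySize === 0 && decodedBodySize === 0) {
    return 'unknown';
  }

  if (transferSize === 0) return 'cache'; // nothing went over the network
  if (transferSize < encodedBodySize) return 'revalidated'; // headers only
  return 'network'; // headers plus body were transferred
}
```

In a browser this could be applied to each entry from `performance.getEntriesByType('resource')` before beaconing the results.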

First Page in a Session vs Later Ones

Shared caching should have the most impact on the first page in a session (with subsequent pages benefiting from the first page retrieving and caching common resources) so ideally we want to be able to differentiate data for the initial page from the later pages in a session.

One way of doing this might be to store a timestamp in local storage, or a session cookie, updating the timestamp on each page view. When the stored value doesn't exist, or the timestamp is too old, consider the session to be new; otherwise consider it to be the continuation of an existing session.

This isn't a perfect approach for identifying the first page in a session but it's probably close enough.
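A minimal sketch of the local storage variant follows. The key name and the 30-minute idle limit are assumptions chosen for illustration, and the storage object is passed in so the function can be used with `window.localStorage` or a stand-in.

```javascript
const SESSION_KEY = 'lastPageView'; // hypothetical key name
const SESSION_TIMEOUT_MS = 30 * 60 * 1000; // assumed 30-minute idle limit

// Returns true when this page view starts a new session, and records the
// current time so the next page view can be compared against it.
function isFirstPageInSession(storage, now = Date.now(), timeoutMs = SESSION_TIMEOUT_MS) {
  // Number(null) is 0 and Number('junk') is NaN; both fail the check
  // below, so a missing or corrupt value counts as a new session.
  const last = Number(storage.getItem(SESSION_KEY));
  storage.setItem(SESSION_KEY, String(now));
  return !(last > 0 && now - last <= timeoutMs);
}
```

In a page this would be called once per view as `isFirstPageInSession(window.localStorage)`, with the result attached to whatever is beaconed to RUM.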

Final Thoughts

Given the growth in 3rd parties tracking us across the web, mechanisms that improve our privacy are to be applauded even if they lead to a theoretical decrease in performance.

It's unclear how real the decrease in performance is - I'm pretty skeptical that any common library from one of the public CDNs is used widely enough for the shared caching benefits to be seen, but as with all things performance we should 'measure it, not guess it'.

(Thanks to Doug and Yoav for reviewing drafts of this post and suggesting improvements)

Further Reading

Intelligent Tracking Prevention, WebKit, 2017

Optionally partition cache to prevent using cache for tracking, WebKit, 2013

Getting a Packet Trace, Apple, 2016

Tracking Users across the Web via TLS Session Resumption, Erik Sy, Christian Burkert, Hannes Federrath, Mathias Fischer, 2018

Caching and the Google AJAX Libraries, Steve Webster, 2011

Web performance: Cache efficiency exercise, Facebook, 2015

Performance Research, Part 2: Browser Cache Usage - Exposed!, Yahoo, 2007

User Timing API, W3C, 2013

Resource Timing Level 2 API, W3C, 2018 (Working Draft)

Local cache performance, Ben Maurer - Facebook, 2016

ResourceTiming in Practice, Nic Jansma, 2015 updated 2018